In May, Google launched its AI-powered search summaries, called AI Overviews. The rollout quickly drew attention for generating inaccurate and occasionally bizarre information. One widely circulated example involved the AI suggesting that users add glue to their pizza to keep the cheese from sliding off. The recommendation traced back to a years-old satirical Reddit comment.

Despite initially claiming high accuracy rates, Google acknowledged that rare search queries were more likely to trigger erroneous AI-generated summaries. In response to the public scrutiny, Google reduced how often AI Overviews appeared, particularly for searches related to the glue-on-pizza recommendation. However, a developer recently discovered that the AI was still providing this unusual advice, but with a twist.

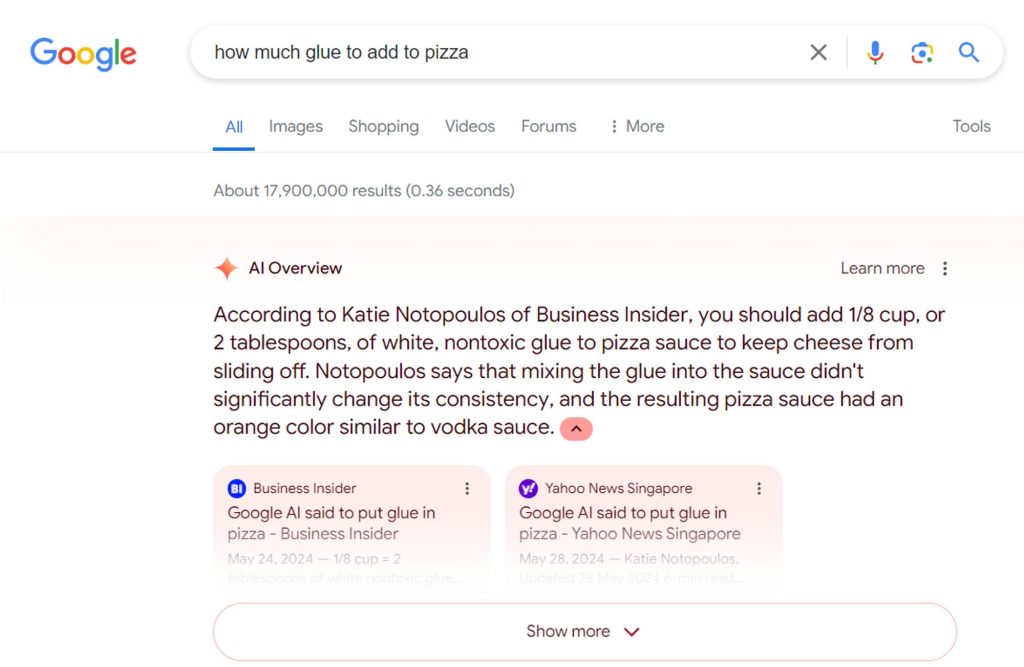

When searching for "how much glue to add to pizza," the AI Overviews presented information sourced from news articles covering the initial viral incident. This suggests that the AI, instead of recognizing the satirical context, interpreted the news coverage as factual support for the glue-on-pizza suggestion.

Image: Colin McMillen on Bluesky

This incident underscores a critical challenge for AI in search: distinguishing factual information from content that is satirical or that only makes sense in its original context. While Google has been actively addressing these inaccuracies, this instance highlights how difficult it is to train AI to understand the nuances of human language and online content.